Note: I originally posted this on Medium and ported it here afterward.

Any infrastructure of significant size is going to produce an overwhelming amount of data. This data can come from any number of sources: log files, CPU/disk performance, traffic/app load, etc. Monitoring and making sense of this data is critical to maintaining a happy system, and it certainly isn’t a trivial task.

This is a great use case for Splunk. Splunk gives us the ability to ingest large amounts of data from our machines, pulling it into a single platform where it is parsed and indexed. Once indexed, Splunk allows us to intelligently search, analyze, and visualize the data using the tools provided by the platform. Splunk also provides the ability to create alerts based on certain thresholds or trends in the data, which is critical for staying on top of metrics and for spotting issues as soon as (or even before) they occur.

External scripts as data inputs

One of the features that Splunk touts is the ability to pull in data from an external script, which essentially gives you the capability to generate data from any arbitrary source. There are some pitfalls, however, when using external scripts as data inputs, and it took a bit of wranglin’ to track down where I was going wrong.

A real-life example

For this example we will be using the Dark Sky API, which powers the Forecast.io weather website. You will need an API key, but it’s freely available from the Forecast.io developer website. We’ll also be using the Forecast.io Python library, installed via pip:

pip install python-forecastio

I’ll assume that the reader is familiar with how to set up and use virtual environments in Python, and therefore will not go into any more detail about installing or using Python packages.

The Python script

We’re going to add this script to the general Splunk scripts directory (not associated with any particular app). This directory is located at $SPLUNK_HOME/bin/scripts/.

Create and edit a file by the name of forecast.py in the above directory, and ensure that it has the following contents (be sure to input your API key):

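#!/usr/bin/env python

import datetime
import json

import forecastio

# Lat/long for Alcatraz.
lat = 37.8267
lng = -122.423

api_key = "[YOUR API KEY HERE]"

# Fetch the current conditions; .d holds the raw data point as a dict.
data = forecastio.load_forecast(api_key, lat, lng).currently().d

# Add an ISO 8601 timestamp so Splunk can index the event with the correct time.
data['datetime'] = datetime.datetime.now().isoformat()

# Splunk reads whatever the script writes to stdout.
print(json.dumps(data, indent=4))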

This script uses your API key and the supplied lat/long coordinates to retrieve a forecast for the given location. Splunk will read anything our script outputs to stdout, so we simply use the print statement to display the JSON payload we want Splunk to pick up.
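Since Splunk indexes whatever lands on stdout, it’s worth keeping error messages off that stream. Here’s a minimal sketch of one way to do that, assuming Python 3. The `emit_event` helper and the `fetch` callable are my own hypothetical names (not part of Splunk or the Forecast.io library), and the lambda at the bottom is just a stand-in for the real API call:

```python
import json
import sys


def emit_event(fetch, out=sys.stdout, err=sys.stderr):
    """Write one JSON event to `out`; report failures on `err`.

    `fetch` is a hypothetical zero-argument callable returning a dict.
    Returns 0 on success and 1 on failure, suitable as an exit code.
    """
    try:
        event = fetch()
    except Exception as exc:
        # Keep diagnostics off stdout so they are never indexed as data.
        err.write("forecast input failed: %s\n" % exc)
        return 1
    out.write(json.dumps(event) + "\n")
    return 0


# Stand-in for the real API call, for illustration only.
emit_event(lambda: {"summary": "Clear", "temperature": 61.2})
```

In a real script you would pass a function that wraps the `forecastio.load_forecast(...)` call and hand the return value to `sys.exit()`.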

Note that our Python script also adds a datetime field in ISO 8601 format to the JSON payload; this will make it easier for Splunk to index our returned data with the correct time.
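The script above uses the machine’s local time with no UTC offset. As a small variation (my own assumption, not something Splunk requires), here is a sketch that stamps the payload with a timezone-aware UTC time instead, so the offset is spelled out in the string. It assumes Python 3, and `stamp` is a hypothetical helper name:

```python
import datetime
import json


def stamp(reading, now=None):
    """Return a copy of `reading` with an ISO 8601 `datetime` field added."""
    if now is None:
        # Timezone-aware UTC, so the string carries an explicit +00:00 offset.
        now = datetime.datetime.now(datetime.timezone.utc)
    stamped = dict(reading)  # leave the caller's dict untouched
    stamped["datetime"] = now.isoformat()
    return stamped


# A fixed time keeps the example reproducible.
fixed = datetime.datetime(2016, 1, 2, 3, 4, 5, tzinfo=datetime.timezone.utc)
print(json.dumps(stamp({"temperature": 61.2}, now=fixed)))
# prints {"temperature": 61.2, "datetime": "2016-01-02T03:04:05+00:00"}
```

Whether Splunk actually uses this field as the event time depends on how timestamp recognition is configured for your sourcetype, so treat it as a starting point.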

Problem 1: Splunk’s bundled Python interpreter

Here’s where we run into our first issue. If we were to simply add this script as a Data Input in Splunk, it would fail to run when called. This is because Splunk will use its own bundled Python interpreter and libraries when running the script, and those know nothing about the packages we installed with pip.

We will need to create a wrapper script that will call our Python script, using the correct interpreter and paths. In addition, we will need to unset the $LD_LIBRARY_PATH environment variable, or else our Python interpreter will still load Splunk’s bundled libraries.

Create and edit a file by the name of wrapper.sh in the same place you created forecast.py, and ensure that it has the following contents (be sure to update the paths to match your system):

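#!/bin/bash
set -e

# Drop Splunk's bundled libraries so the system Python loads its own.
unset LD_LIBRARY_PATH

# Point Python at the packages installed for forecast.py.
export PYTHONPATH=/path/to/your/dist-packages/dir

python "$SPLUNK_HOME/bin/scripts/forecast.py"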

Takeaway 1: Unless your Python script is so basic that it does not need anything outside of the standard library, use a wrapper script.
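If you’re not sure whether the wrapper is doing its job, it can help to see which interpreter and paths a script actually ends up with. Below is a rough diagnostic sketch, assuming Python 3. `describe_environment` is a hypothetical helper of mine; it only reads standard-library values and the two environment variables discussed above:

```python
import json
import os
import sys


def describe_environment(environ=os.environ):
    """Collect the interpreter details that matter for a scripted input."""
    return {
        "executable": sys.executable,
        "version": sys.version.split()[0],
        "ld_library_path": environ.get("LD_LIBRARY_PATH"),
        "pythonpath": environ.get("PYTHONPATH"),
        "sys_path": list(sys.path),
    }


print(json.dumps(describe_environment(), indent=2))
```

Run it once directly and once through wrapper.sh, then compare the output. If `executable` points somewhere under $SPLUNK_HOME, or `ld_library_path` is still set, the wrapper isn’t taking effect.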

Problem 2: External script ownership

Splunk Enterprise will run scripts and jobs as whichever system user is the owner of $SPLUNK_HOME. I initially noticed that the splunkd service was running as the root user, and mistakenly assumed that my scripts must also be owned by root.

To compound my troubles, Splunk did not give me any insight into the failure when attempting to run a script as the wrong user; there was nothing in the logs or job results to alert me to this fact. The data input simply failed to produce any events, and Splunk was silent on any failed attempts to run the script. It was purely a guess on my part about script ownership which led me to discover this.

Once I was able to track this down, I searched high and low through the Splunk config files and settings menus, trying to figure out where Splunk had been told to run scripts and jobs as a particular user. With no luck, I turned to Google. Only then was I able to find the answer, buried in a documentation article on Splunk Enterprise Installation.

Takeaway 2: Be sure that your script is owned by the same system user that owns the $SPLUNK_HOME directory.
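Because Splunk stayed silent about this, a quick ownership check can save some guessing. The following is a minimal sketch for Unix-like systems, assuming Python 3 and that the SPLUNK_HOME environment variable is set in your shell. `owner_name` and `same_owner` are hypothetical helper names, not anything Splunk provides:

```python
import os
import pwd


def owner_name(path):
    """Return the user name that owns `path`, or the numeric uid as text."""
    uid = os.stat(path).st_uid
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        # The uid has no entry in the password database.
        return str(uid)


def same_owner(script_path, home_path):
    """True when both paths are owned by the same system user."""
    return os.stat(script_path).st_uid == os.stat(home_path).st_uid


splunk_home = os.environ.get("SPLUNK_HOME")
if splunk_home:
    script = os.path.join(splunk_home, "bin", "scripts", "wrapper.sh")
    if os.path.exists(script):
        print("SPLUNK_HOME owner:", owner_name(splunk_home))
        print("wrapper.sh owner: ", owner_name(script))
        print("match:", same_owner(script, splunk_home))
```

If the two owners differ, changing the script’s owner with chown to match $SPLUNK_HOME is the fix described above.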

Adding the script as a data input in Splunk

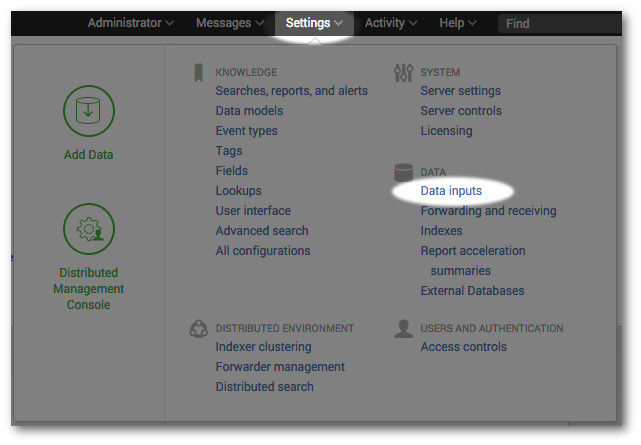

Okay, so we’ve got our shiny new Python script and we’ve got our handy-dandy wrapper script ready to go. Let’s create a new Data Input in Splunk for our script. As the administrator, go to Settings and click on Data Inputs:

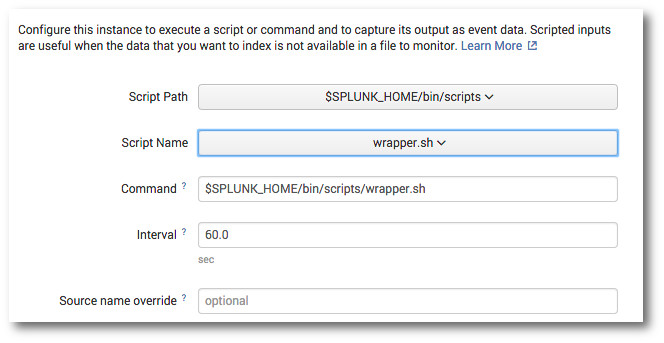

There are two types of inputs: Local Inputs and Forwarded Inputs. In this example, we are going to add a script to the server running the Splunk indexer, so we’ll click on Script in the Local Inputs section. Click the New button to add a new data input. You will be presented with the following screen:

Make sure to select $SPLUNK_HOME/bin/scripts as the script path, and pick our wrapper.sh script as the script to be run. You can ignore the Command and Source name override fields.

The default time interval is 60 seconds. This means that Splunk will attempt to run your script every 60 seconds, parsing and indexing any data returned from the script. Click Next to display the screen where we will configure the input type:

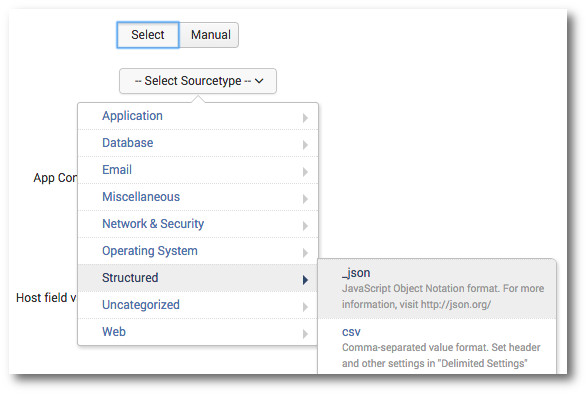

Click on Select Sourcetype, highlight Structured, and choose _json, since we are outputting JSON from our Python script. If you have a specific app that you would like to associate this data with, or a custom index to store the data in, choose the appropriate values on this page. Otherwise, you can leave the rest of the options at their default values. Click the Review button to continue to the next page. If everything looks correct, click the Submit button.

Congratulations!

That’s it! Splunk should now begin running your script and indexing data from it! You can search for all events from your new Data Input by using the following Splunk search query (replacing [PATH] with your $SPLUNK_HOME value):

source="[PATH]/bin/scripts/wrapper.sh"